Metascience Observatory Newsletter #1

Happy New Year from the Metascience Observatory! This newsletter will go out either bimonthly or quarterly. To sign up for the non-Substack version of our email list, click here.

After a few setbacks, we are now gearing up our efforts to build our AI pipelines. Here’s what we’ve built so far:

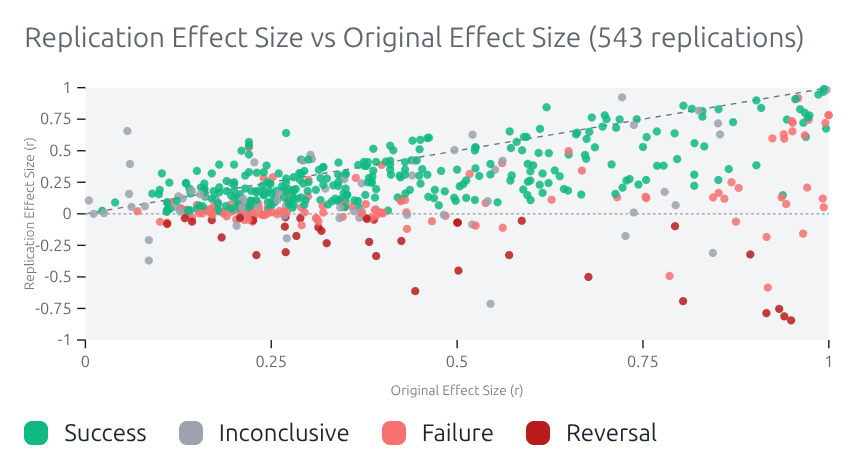

Replications database prototype

Our current database is ~90% human-curated, ~10% AI-curated. Currently it mostly covers psychology, but we’re working on expanding into biomedicine.

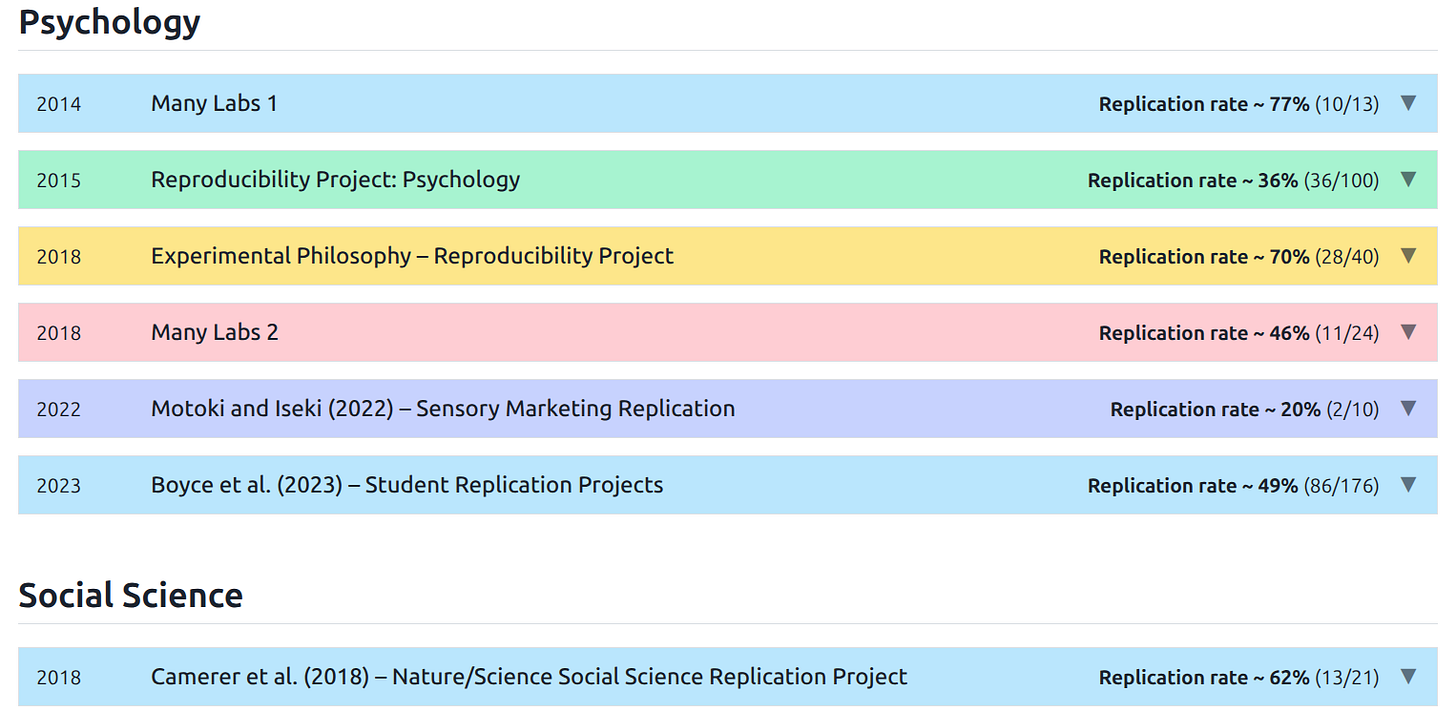

Summary of previous replication initiatives

This page summarizes previous replication projects that made progress in quantifying reproducibility by doing the hard work of actually replicating experiments.

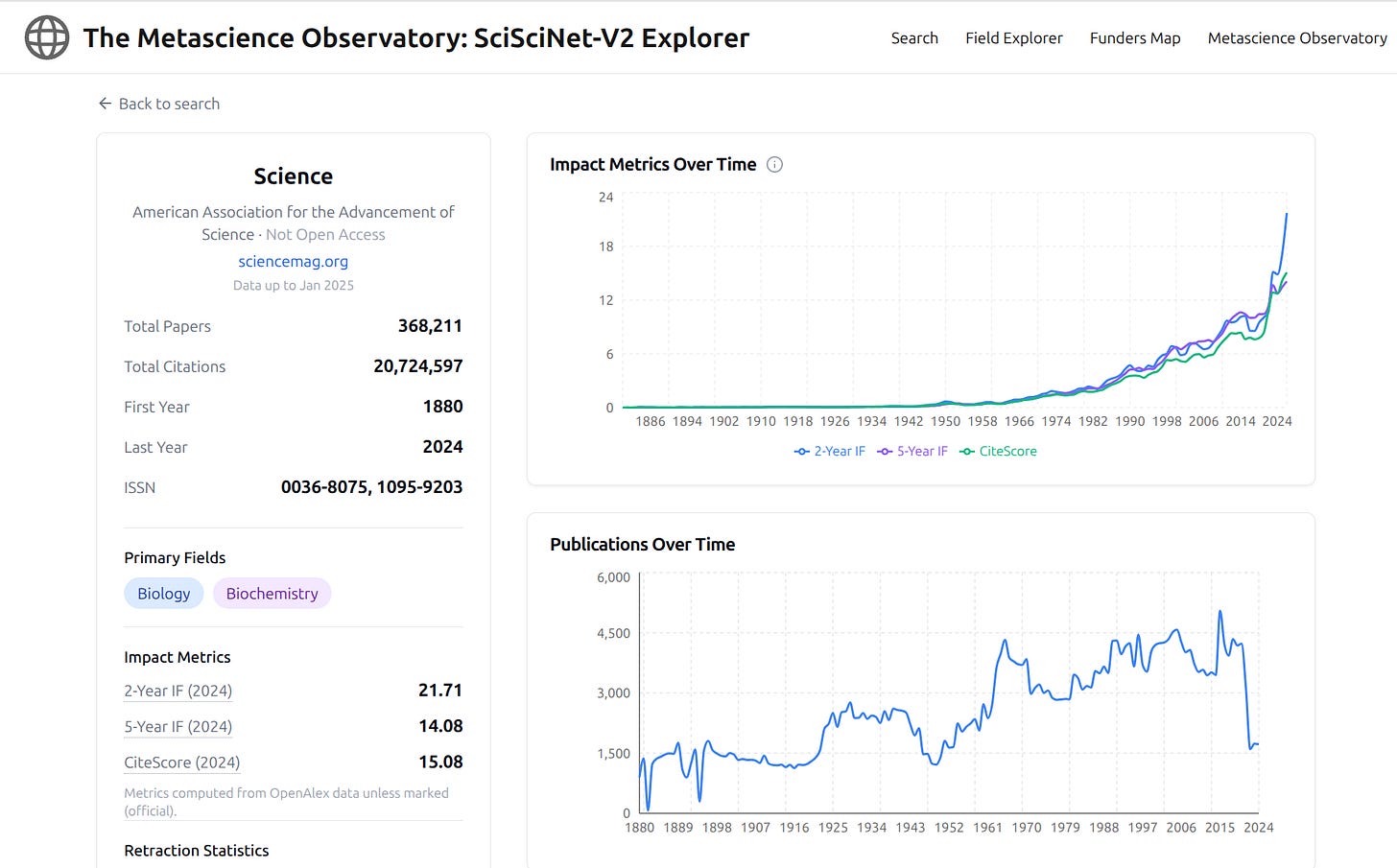

The Metascience Observatory Explorer

We built a prototype dashboard for exploring the open-source SciSciNet-V2 dataset, a massive dataset of journals, papers, patents, authors, institutions, funders, and all the linkages between them. The dataset comes from Dashun Wang's lab at Northwestern University, which also recently released SciSci-GPT. We've also integrated Scopus's public journal metrics data and Retraction Watch's retractions data, and added calls to the NSF's and NIH's grant database APIs.

The resulting dashboard surfaces information on ~100 million researchers and 249 million papers, which is more than paid services like Dimensions (~140M papers) and Web of Science (~100M papers). It may even be more comprehensive than the paid version of Scopus. The downside is that there are probably more data issues than you'd get with paid services. For example, we've noticed occasional problems disambiguating authors with the same name, as well as duplicate pages for the same author.

From the Metascience Observatory’s perspective, the most important feature of the Explorer will be the journals pages (example), where we hope to evaluate journals far beyond the usual “impact factor” type metrics. We plan to provide z-curve (p-hacking) analysis and reproducibility metrics for major journals.
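For readers curious what z-curve analysis starts from, here is a minimal sketch of its preprocessing step only, not of z-curve itself and not our actual pipeline. It assumes you already have a list of two-sided p-values reported in a journal's papers. Each p-value is converted to an absolute z-score, and the observed discovery rate (ODR, the share of results significant at alpha) is computed. The real z-curve method then fits a mixture model to the significant z-scores to estimate expected discovery and replication rates. A large gap between the ODR and that expected rate is what suggests selective reporting. The function names `p_to_z` and `zcurve_inputs` are hypothetical.

```python
from statistics import NormalDist


def p_to_z(p: float) -> float:
    """Convert a two-sided p-value to an absolute z-score (hypothetical helper)."""
    if not 0.0 < p <= 1.0:
        raise ValueError("p must be in (0, 1]")
    # Use the lower tail (p/2) for better precision with very small p-values.
    return abs(NormalDist().inv_cdf(p / 2.0))


def zcurve_inputs(p_values, alpha=0.05):
    """Return all z-scores, the significant z-scores, and the observed discovery rate.

    This is only the input preparation for z-curve; the mixture-model fit
    that estimates expected discovery/replication rates is not included.
    """
    if not p_values:
        raise ValueError("need at least one p-value")
    z_scores = [p_to_z(p) for p in p_values]
    significant = [z for p, z in zip(p_values, z_scores) if p < alpha]
    odr = len(significant) / len(p_values)
    return z_scores, significant, odr


if __name__ == "__main__":
    # Made-up p-values, purely for illustration.
    zs, sig, odr = zcurve_inputs([0.001, 0.03, 0.049, 0.2, 0.6])
    print([round(z, 2) for z in zs], odr)
```

Note the clustering to watch for: many significant z-scores sitting just above the critical value (about 1.96 for alpha = 0.05) with few just below it.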

On the funders map, you can see top funders by country and within each country you can rank them by metrics like citations per paper. If there are cool features you’d like to see on our Explorer please let us know!

A peek at our roadmap

Once we’ve built out our replications database further, we plan to train an AI model to predict whether a paper will replicate. We’re also developing a custom AI agent for forensic metascience and have ambitions to venture into automated meta-analysis.

We’re hiring!!

We are looking for part-time contractors and consultants to help us build our AI pipelines and stay on top of the fast-moving field of agentic AI. Experience with developing custom AI agents and running open-source LLMs locally is a big plus. We’re also looking for experts on meta-analysis. Both paid and volunteer roles are available. Get in touch with Dan Elton for more info (for security reasons, he’s not sharing his email here).

Recent metascience news of note

Dana-Farber settles suit alleging image manipulation for $15 million

HHS’s Office of Research Integrity published only two misconduct findings in 2025

Researchers petition for retraction of a Nature paper on AI-generated crystals that has over 1,000 citations

Andrew Ng’s group at Stanford releases a free AI tool for peer review